Traditional ML: optimization. Adversarial ML: game theory.

Generative Adversarial Network (GAN)

Generative Models

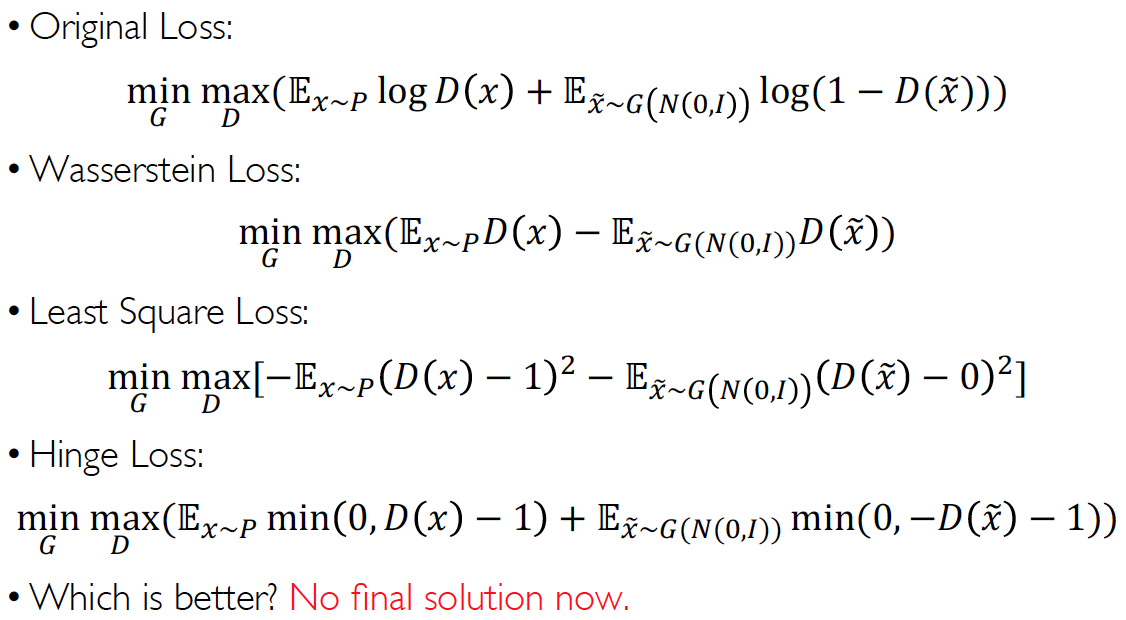

General idea of GAN: for some distribution metric D,

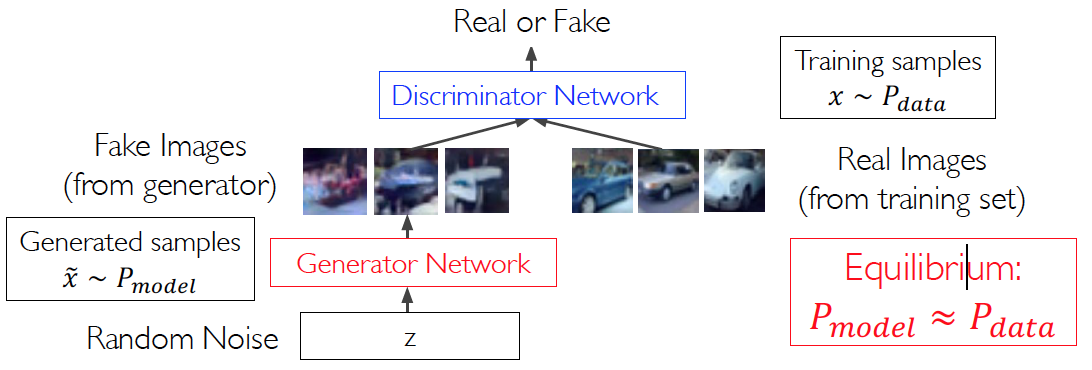

Training GANs: Two-Player Game

- Generator network (G)

  - tries to fool the discriminator by generating real-looking images (a complex mapping)

- Discriminator network (D)

  - tries to distinguish between real and fake images (a two-class classification model)


Train jointly with a minimax objective function, where D(x) is the discriminator's output for real data x and D(G(z)) is its output for generated (fake) data G(z).
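For reference, the minimax objective is usually written in the following standard form. The notation $p_{\text{data}}$ (real data distribution) and $p_z$ (noise prior) is assumed here rather than taken from these notes.

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The first term is largest when D(x) is close to 1 on real data. The second is largest when D(G(z)) is close to 0 and smallest when D(G(z)) is close to 1, which is why D maximizes and G minimizes the same expression.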

- max over D such that D(x) is close to 1 (real) and D(G(z)) is close to 0 (fake)

  - gradient ascent on discriminator D

  - small gradient for good samples, large gradient for bad samples

- min over G such that D(G(z)) is close to 1 (D is fooled into thinking G(z) is real)

  - gradient descent on generator G

  - small gradient for bad samples, large gradient for good samples

  - optimizing the generator objective does not work well due to vanishing gradients

- The two-player game amounts to matching two empirical distributions: that of the real data and that of the generated samples
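The vanishing-gradient remark can be checked numerically. The sketch below assumes the discriminator output is D = sigmoid(a) for a logit a (an assumption, not stated in the notes) and evaluates the derivative of the generator loss log(1 - D(G(z))) with respect to a. The second function, for the non-saturating loss -log D(G(z)), is a commonly used alternative that is not part of these notes and is included only for comparison.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def grad_saturating(a):
    """d/da log(1 - sigmoid(a)) = -sigmoid(a): the minimax generator loss."""
    return -sigmoid(a)

def grad_non_saturating(a):
    """d/da [-log sigmoid(a)] = sigmoid(a) - 1: a common alternative (assumption)."""
    return sigmoid(a) - 1.0

# Logit a = -6: D thinks the sample is clearly fake (a "bad" sample).
# Logit a = +6: D thinks the sample is real (a "good" sample).
for a in (-6.0, 0.0, 6.0):
    print(f"logit={a:+.0f}  D={sigmoid(a):.4f}  "
          f"saturating={grad_saturating(a):+.4f}  "
          f"non_saturating={grad_non_saturating(a):+.4f}")
```

With the minimax loss the gradient magnitude equals D itself, so it is nearly zero exactly when the generator is doing badly and most needs a learning signal.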

Wasserstein GAN (WGAN)

If $\mathbb{E}_{x \sim P}[f(x)] = \mathbb{E}_{x \sim Q}[f(x)]$ for all functions $f$, then $P = Q$.

Measure the distance between distributions with the dual form of the Wasserstein distance:

Use a network D to parametrize the class of Lipschitz functions:

Wasserstein GAN:
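For reference, these three statements are usually written in the following standard forms. The notation is assumed here rather than taken from the notes: $P$ is the real data distribution, $Q$ the distribution of generated samples $G(z)$ with $z \sim p_z$, $\|f\|_L \le 1$ means $f$ is 1-Lipschitz, and $\mathcal{D}$ is the set of functions the network D can represent under a Lipschitz constraint.

$$
W(P, Q) = \sup_{\|f\|_L \le 1} \; \mathbb{E}_{x \sim P}\big[f(x)\big] - \mathbb{E}_{x \sim Q}\big[f(x)\big]
$$

$$
W(P, Q) \approx \max_{D \in \mathcal{D}} \; \mathbb{E}_{x \sim P}\big[D(x)\big] - \mathbb{E}_{z \sim p_z}\big[D(G(z))\big]
$$

$$
\min_G \max_{D \in \mathcal{D}} \; \mathbb{E}_{x \sim P}\big[D(x)\big] - \mathbb{E}_{z \sim p_z}\big[D(G(z))\big]
$$

Unlike the original GAN objective, D outputs an unbounded score rather than a probability, and no logarithm is applied.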

Adversarial Training

find a small perturbation (noise) that leaves the appearance of the image unchanged but causes the network to make a mistake

Reasons for adversarial examples

- overfitting

- local linearity (ReLU)

  - If a sample lies near the classification surface and the local surface is not nonlinear enough to fit it, the sample is exposed to the classification surface and an adversarial example can be created nearby
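The local-linearity argument can be illustrated with a minimal sketch. It assumes a purely linear score w @ x as a stand-in for a locally linear ReLU network; the function name `worst_case_shift` is hypothetical. Moving every coordinate by eps in the direction sign(w) shifts the score by eps times the L1 norm of w, which grows with the input dimension even though each coordinate changes only slightly.

```python
import numpy as np

def worst_case_shift(w, eps):
    """Largest change of the linear score w @ x under an L-infinity perturbation of size eps."""
    delta = eps * np.sign(w)   # push every coordinate by eps in the score-increasing direction
    return float(w @ delta)    # equals eps * sum(|w_i|)

rng = np.random.default_rng(0)
for dim in (10, 1_000, 100_000):
    w = rng.normal(size=dim)   # random weights, purely for illustration
    print(dim, worst_case_shift(w, eps=0.01))
```

For image-sized inputs the accumulated shift can be large enough to cross the classification surface while the per-pixel change stays imperceptible.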

Attacking Methods

White-Box Attack

- bounded attack method

- targeted attack

- untargeted attack

- FGSM

- PGD
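As a rough sketch of the two methods named above, the following implements untargeted FGSM and PGD under an L-infinity bound eps. To stay self-contained it attacks a logistic-regression model with an analytic input gradient, which is an assumption standing in for a real network; the weights, the input, and the parameters `eps`, `alpha`, and `steps` are all hypothetical.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def loss_and_grad(x, y, w, b):
    """Binary cross-entropy of a logistic model and its gradient w.r.t. the input x."""
    p = sigmoid(w @ x + b)
    loss = -(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))
    grad_x = (p - y) * w           # white-box: the attacker can read w and b
    return loss, grad_x

def fgsm(x, y, w, b, eps):
    """Single step of size eps along the sign of the loss gradient."""
    _, g = loss_and_grad(x, y, w, b)
    return x + eps * np.sign(g)

def pgd(x, y, w, b, eps, alpha, steps):
    """Repeated small sign-gradient steps, each projected back into the eps-ball around x.
    No random start is used here, for simplicity."""
    x_adv = x.copy()
    for _ in range(steps):
        _, g = loss_and_grad(x_adv, y, w, b)
        x_adv = x_adv + alpha * np.sign(g)
        x_adv = np.clip(x_adv, x - eps, x + eps)   # projection onto the L-infinity ball
    return x_adv

w, b = np.array([2.0, -1.0, 0.5]), 0.0             # hypothetical model
x, y = np.array([0.5, -0.2, 0.1]), 1.0             # hypothetical input with true label 1
x_fgsm = fgsm(x, y, w, b, eps=0.3)
x_pgd = pgd(x, y, w, b, eps=0.3, alpha=0.1, steps=5)
for name, xs in (("clean", x), ("fgsm", x_fgsm), ("pgd", x_pgd)):
    print(name, loss_and_grad(xs, y, w, b)[0])
```

Both attacks here are untargeted: they increase the loss on the true label. A targeted variant would instead decrease the loss with respect to a chosen target label.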

Black-Box Attack

use the transferability of adversarial examples: craft them on a substitute model, then apply them to the target model
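A toy sketch of a transfer attack follows. It assumes the attacker has a surrogate logistic model with weights similar to the target's, as if it had been trained on similar data, and can observe only the target's predicted labels. All weights and inputs are hypothetical.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

# Surrogate model: fully known to the attacker (hypothetical weights).
w_sur, b_sur = np.array([1.5, -0.8, 0.7]), 0.0

def target_predict(x):
    """Black-box target: the attacker sees only the predicted label."""
    w_tgt, b_tgt = np.array([2.0, -1.0, 0.5]), 0.0   # hidden from the attacker
    return int(sigmoid(w_tgt @ x + b_tgt) > 0.5)

def fgsm_on_surrogate(x, y, eps):
    """Craft the adversarial example using only the surrogate's gradient."""
    p = sigmoid(w_sur @ x + b_sur)
    grad_x = (p - y) * w_sur
    return x + eps * np.sign(grad_x)

x, y = np.array([0.5, -0.2, 0.1]), 1.0
x_adv = fgsm_on_surrogate(x, y, eps=0.5)
print("target on clean input:      ", target_predict(x))
print("target on transferred input:", target_predict(x_adv))
```

The example transfers because the two models have similar decision surfaces. How well this holds for real networks depends on how closely the substitute resembles the target.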